Artificial intelligence (AI) is no longer just a buzzword in tech circles. It has become an integral part of our daily lives, from the recommendation algorithms that suggest what we should buy to the chatbots that answer our customer service queries. But AI is not a new concept. It has been around for decades, and the first AI program was developed in the mid-1950s. In this article, we explore the history of AI, the development of the first AI program, and the implications of AI for society.

History of AI

The idea of artificial beings dates back to ancient Greece, where myths and stories described them. The modern concept of AI, however, took shape in the mid-20th century, when scientists began exploring whether machines could be built to think and learn like humans. The term “artificial intelligence” was coined by John McCarthy, an American computer scientist, in the 1955 proposal for a summer workshop held at Dartmouth College in 1956.

The First AI Program

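The program widely regarded as the first AI program was the Logic Theorist, written in 1955 and 1956 by Allen Newell, Herbert A. Simon, and Cliff Shaw, who worked at the RAND Corporation and the Carnegie Institute of Technology. It was presented at the 1956 Dartmouth workshop, which was organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The Logic Theorist was designed to mimic the problem-solving skills of a human being. It proved mathematical theorems by starting from a set of axioms, applying rules of inference, and using heuristics to guide its search for a proof. It went on to prove 38 of the first 52 theorems in chapter 2 of Whitehead and Russell's Principia Mathematica.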
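
To make the idea of deducing new statements from a set of rules concrete, here is a minimal Python sketch of forward chaining over simple if-then rules. It is an illustration only, not a reconstruction of the Logic Theorist, whose actual methods were more elaborate. The names `forward_chain`, `can_prove`, `RULES`, and `FACTS`, along with the toy statements `p`, `q`, `r`, and `s`, are all hypothetical.

```python
from typing import FrozenSet, Iterable, Set, Tuple

# A rule says: if every premise is already known, the conclusion may be deduced.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Iterable[str], rules: Iterable[Rule]) -> Set[str]:
    """Apply the rules repeatedly until no new statement can be deduced."""
    known = set(facts)
    rule_list = list(rules)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rule_list:
            # Deduce the conclusion only when all of its premises are known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def can_prove(goal: str, facts: Iterable[str], rules: Iterable[Rule]) -> bool:
    """Return True if the goal follows from the facts under the given rules."""
    return goal in forward_chain(facts, rules)


# Hypothetical toy knowledge base: "p implies q" and "q and r together imply s".
RULES = [
    (frozenset({"p"}), "q"),
    (frozenset({"q", "r"}), "s"),
]
FACTS = {"p", "r"}

if __name__ == "__main__":
    print(can_prove("s", FACTS, RULES))
    print(can_prove("t", FACTS, RULES))
```

In this toy example, `s` can be deduced in two steps (first `q` from `p`, then `s` from `q` and `r`), while `t` cannot be deduced at all because no rule concludes it.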

The development of the Logic Theorist was a significant milestone in the history of AI. It demonstrated that machines could be programmed to perform tasks that previously required human intelligence. The Logic Theorist also paved the way for other AI programs, such as the General Problem Solver, which Newell, Shaw, and Simon introduced in the late 1950s, and the first expert systems, which emerged in the 1960s.

Implications of AI

The development of AI has far-reaching implications for society. On the one hand, AI has the potential to solve some of the world’s most pressing problems, from climate change to healthcare. AI-powered technologies can improve efficiency, accuracy, and productivity in various industries, from manufacturing to finance.

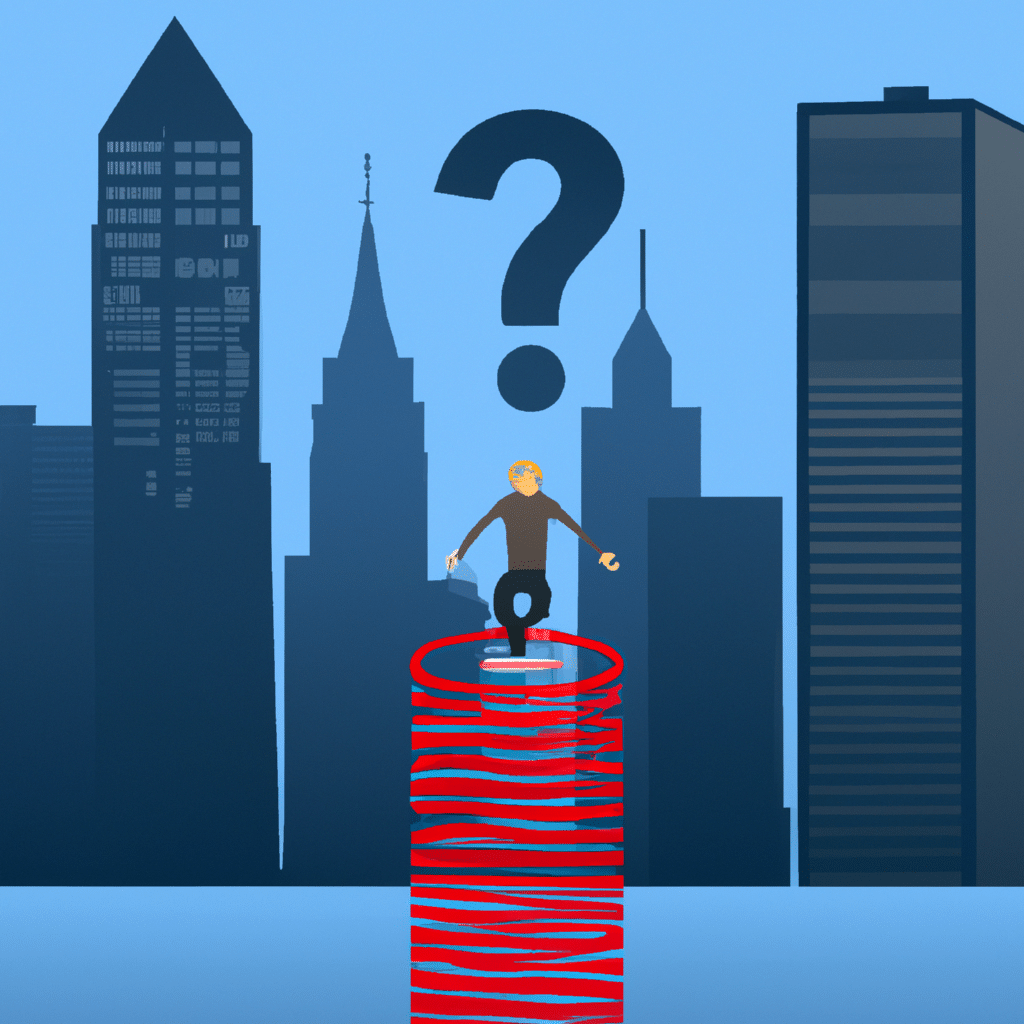

On the other hand, AI also poses significant risks and challenges. One of the biggest concerns is the impact of AI on employment. As machines become more intelligent and capable, they are likely to replace human workers in many industries. This could lead to widespread job displacement and unemployment, particularly for low-skilled workers.

Another concern is the potential misuse of AI by governments and corporations. AI-powered surveillance systems could be used to monitor and control citizens, while AI algorithms could be used to manipulate public opinion and exacerbate social inequalities.

Conclusion

The first AI program showed that a machine could be programmed to perform tasks that had previously required human intelligence. Since then, AI has continued to evolve and has become an integral part of our daily lives. While AI has the potential to help solve many of the world’s problems, it also poses significant risks and challenges. As we continue to develop and deploy AI technologies, it is essential to weigh their ethical, social, and economic implications carefully.